Long time no see...

Hi again! It's been a long time since I've written a blog post, and a lot has happened. I'm now working at Rebellion as a Junior Audio Designer, which is pretty sweet. Part of this change meant making the leap from Pro Tools to Reaper. I've totally fallen in love with Reaper and wish I had switched sooner; it's phenomenal. As part of the transition I've had to work out ways to replicate my old workflow. Here's how I've managed to use Snapshots in Reaper and have them triggered by markers on the timeline. This makes it easy to mix multiple sounds of the same type that share effects chains or elements but need slight mix tweaks for each one.

A few people in the Gameaudio Slack group were interested in this, so I decided to do a quick write-up.

1.) Setting up Snapshots

The first thing you'll need to do is install the SWS extensions. I strongly recommend every Reaper user installs them, as they add countless extra actions that I find useful every day. One of these is the snapshot feature. You can open its window by finding "Open Snapshots Window" in your Actions list.

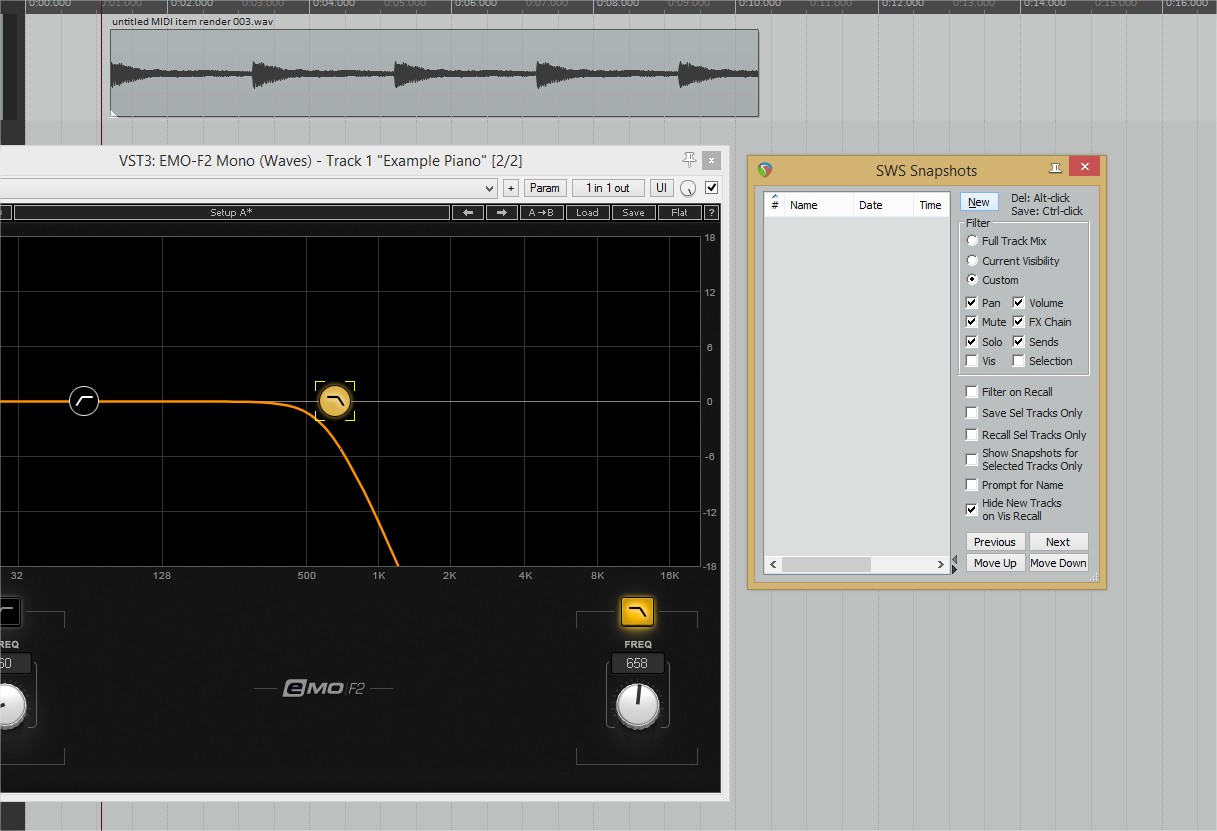

Once you do that, the Snapshots window will open.

From here, simply make your first mix and then hit the New button. Before doing this, you can also decide how much or how little information is stored by using the tick boxes below the New button. With your first mix saved as a snapshot, you can make any alterations safe in the knowledge that the original mix can be recalled at a moment's notice.

From here you can make as many mixes as you need, saving each one sequentially.

Mix 1

Mix 2

This alone is incredibly useful for A/B-ing ideas, as well as for keeping mixes for several different sounds in one project... but what if we could automate their recall?

2.) Recalling Snapshots using Markers

Markers are a fairly standard feature of Reaper, but in case you've not come across them, they simply place a mark on the timeline that is easy to see and to jump to. They are also secretly very powerful: you can make any action trigger from a marker by putting that action's command ID in the marker's name.

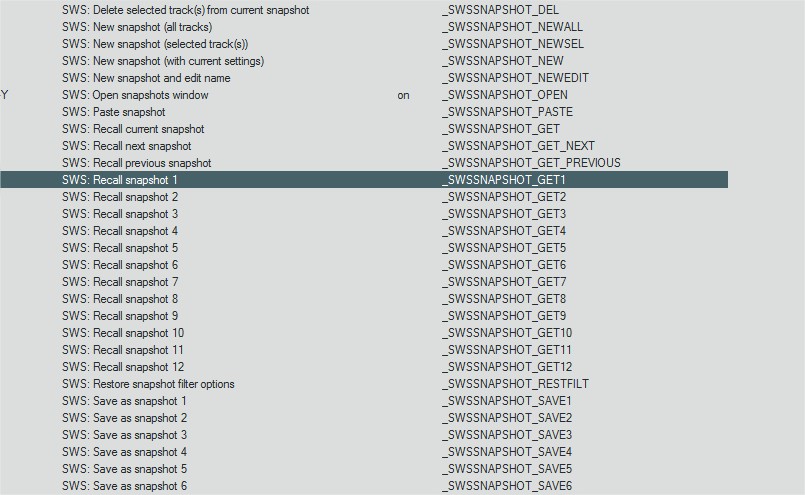

So the first thing to do is get the Command ID for the action we want. In our case that's the action that recalls Snapshot 1, whose Command ID is "_SWSSNAPSHOT_GET1". You can find the Command ID for every single action in the Actions list.

Now simply double-click the marker and paste that Command ID into its name, putting a "!" symbol at the start, so the name reads "!_SWSSNAPSHOT_GET1".

Now, whenever the playhead passes the marker on the timeline, the snapshot assigned to it will load automatically.
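If you're laying out lots of sounds with a marker per mix, the naming pattern is easy to generate rather than type by hand. Below is a minimal Python sketch; it is not part of SWS or Reaper, the helper names are hypothetical, and it assumes the recall actions for other snapshots follow the same "_SWSSNAPSHOT_GET<n>" pattern as Snapshot 1 (check the Command ID column in your Actions list to confirm).

```python
# Hypothetical helpers for building Reaper marker names that trigger SWS snapshot recall.
# ASSUMPTION: recall actions follow the "_SWSSNAPSHOT_GET<n>" pattern seen for snapshot 1.


def snapshot_marker_name(snapshot: int) -> str:
    """Return a marker name that runs the (assumed) SWS 'recall snapshot <n>' action."""
    if snapshot < 1:
        raise ValueError("snapshot numbers start at 1")
    # The leading "!" tells Reaper to treat the rest of the name as a command ID.
    return f"!_SWSSNAPSHOT_GET{snapshot}"


def plan_markers(cues):
    """Turn (time_in_seconds, snapshot_number) pairs into (time, marker_name) pairs, sorted by time."""
    return [(t, snapshot_marker_name(n)) for t, n in sorted(cues)]


if __name__ == "__main__":
    # Example: two sounds laid out 10 seconds apart, each with its own mix.
    for time, name in plan_markers([(10.0, 2), (0.0, 1)]):
        print(f"{time:6.2f}s  {name}")
```

Running it prints each marker time next to the name to paste in (for example "!_SWSSNAPSHOT_GET2" at 10.00s). Inside Reaper, a ReaScript could create the markers directly with the AddProjectMarker API function instead; check the ReaScript API documentation for its exact signature.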

Thanks for reading, and I hope that helped! Feel free to comment or get in touch if you have any questions or just want to talk game audio.

Haroon

UPDATE: One thing I forgot to mention is that this method currently DOES NOT work for standard offline renders. Hopefully this will get fixed soon!